By Veronica Brinkley

As AI models have advanced, it has become increasingly evident that they will play an important role in the future of humankind. Current models have a range of capabilities that are supposedly designed to aid humans. For instance, image-generating models like MidJourney and DALL-E can create images in a multitude of styles, based on the user’s prompt. These programs’ outputs have become so realistic that, to the average viewer, they are often indiscernible from real photographs.

The language model ChatGPT is garnering the most media attention. ChatGPT is advancing rapidly; it’s already on its fourth version. The lab behind it, OpenAI, has stated that the company is building towards an ambitious goal — artificial general intelligence (AGI), their term for an AI that is as smart as, if not smarter than, the average human.

These developments have raised alarms in the tech community. Recently, over 1,000 tech executives, professors, and scientists, including Elon Musk, signed an open letter calling on all AI labs, OpenAI among them, to immediately pause for at least six months the training of AI systems more powerful than GPT-4. The main concern posited by the letter is that “AI systems with human-competitive intelligence can pose profound risks to society and humanity,” and therefore necessitate governmental regulation. The letter went on to say that AI development labs are “locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one — not even their creators — can understand, predict, or reliably control.”

OpenAI, for its part, says its technology will improve society. According to its website, advancements in AI “could help us elevate humanity by increasing abundance, turbocharging the global economy, and aiding in the discovery of new scientific knowledge that changes the limits of possibility.” To me, this sounds like a string of buzzwords, and it says little about what the company actually intends to do with its product.

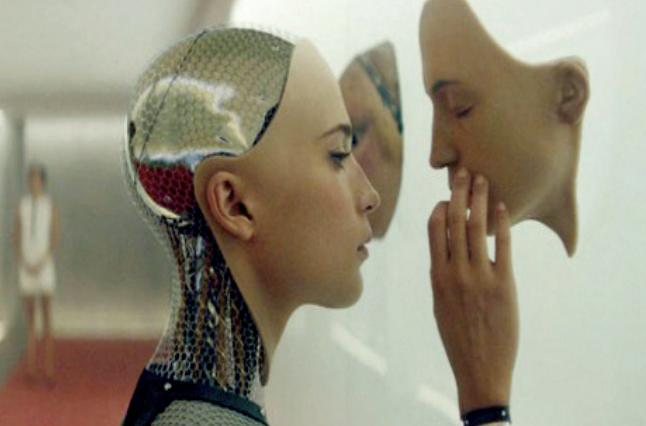

Now, if you’re like me, you’re probably thinking, “I swear I’ve seen this in a movie, and it did not end well.” It’s scary to see the beginning of the march to machine intelligence. Science fiction centered around AI previously felt abstract, but now seems potentially accurate. My personal favorite example is the dystopian sci-fi video game “Detroit: Become Human.”

Quantic Dream’s “Detroit: Become Human” is set in the not-so-distant future of 2038, in an America where highly developed androids have become commonplace. The economy is completely dependent on them as the means of production. However, the androids begin to gain sentience and deviate from their programming. This sparks a civil rights movement of ‘deviant’ androids. A power struggle ensues, as humans refuse to accept androids as autonomous beings. While current AI is far from this reality, it is a chilling projection of what could be in store. The game itself directly comments on this reality in its opening lines: “Remember, this isn’t just a game, it’s our future.”

Drawing parallels between the story of “Detroit: Become Human” and our current social trajectory is hardly difficult. CyberLife, the AI research and development firm in the game’s setting, represents a potential future for OpenAI. In the game, CyberLife has become the standard for androids and therefore holds an immensely disproportionate amount of power over the economy and ruling bodies. Perhaps we aren’t as far away from this reality as we think. In order to prevent such a future, industries need to change to accommodate AI, a technology that is only growing faster, smarter and more powerful. This is where the government must step in. It must regulate the creation of AI very consciously.

In a perfect world, state leaders consciously and unerringly regulate the creation of AI, acting free from considerations of profit and power. However, as we know, the government doesn’t have a great history of neutrality or altruism. And few in Washington even understand technology — just watch the Congressional hearings on Facebook or TikTok for examples. Washington has already failed to stay ahead of tech decisions that affect millions. OpenAI has been seemingly honest about these concerns, stating, “we hope for a global conversation about three key questions: how to govern these systems, how to fairly distribute the benefits they generate, and how to fairly share access.” While it’s great that they ‘hope’ for this outcome, I do not believe that hoping is enough. Improper handling and regulation could have catastrophic effects on society, as seen in the manipulation and misuse of current social media. “Detroit: Become Human” may not be far off.

As college students who are just beginning to enter the job market, we could easily be affected by these advancements in the near future. Entry-level positions might be displaced by AI, and it may become increasingly difficult for us to find meaningful work. In terms of possible issues with the use of this technology, this is just the tip of the iceberg.

So many of us grew up watching events like this happen in the movies and on TV, and it is hard to believe what was once science fiction is beginning to exist. It’s also alarming to watch it unfold, knowing it could impact our futures. But we are not helpless. We can stay informed about technological advancements. We can slow their deployment until the ramifications are understood. We can apply the lessons learned from fictional media and the real-life corruption of social media. And we must consider enacting accompanying regulations on tech industries. We should be mindful that technology is not always a wonder — especially in the hands of mere mortals.